JANUARY 27, 2022

Machine intelligence builds soft machines

Soft machines—a subcategory of robotics that uses deformable materials instead of rigid links—are an emerging technology commonly used in wearable robotics and biomimetics (e.g., prosthetic limbs). Soft robots offer remarkable flexibility, outstanding adaptability, and evenly distributed force, providing safer human-machine interactions than conventional hard and stiff robots.

An essential component of soft machines is the high-precision strain sensor, which monitors the strain changes of each soft body unit and enables a high-precision control loop. Designing these sensors poses several new challenges. First, the complex movements of soft machines require strain sensors that can monitor a wide strain range, from <5% to >200%, which exceeds the capabilities of conventional strain sensors. Second, monitoring the coordinated motions of a soft machine requires multiple strain sensors, each suited to a different sensing task for a separate robotic unit, and developing them demands tedious trial-and-error tests.

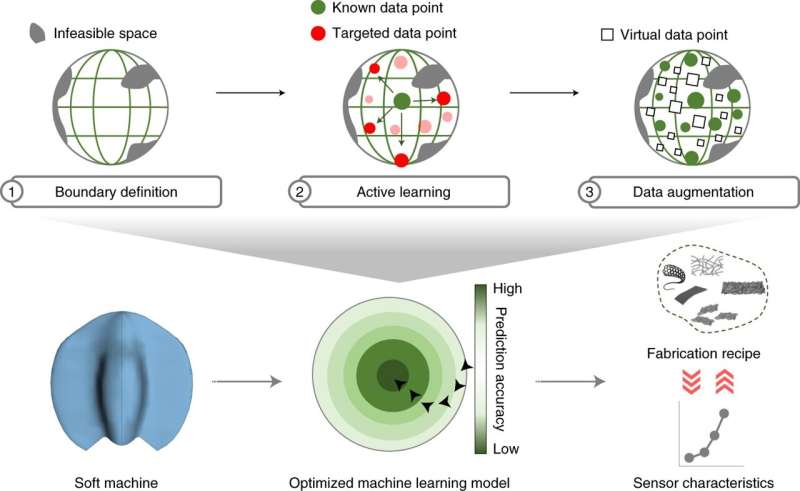

To circumvent these problems, a University of Maryland (UMD) research team led by Po-Yen Chen, a professor of chemical and biomolecular engineering at UMD with a dual appointment in the Maryland Robotics Center, has created a machine learning (ML) framework for building a prediction model that handles two-way design tasks: (1) predicting sensor performance from a fabrication recipe and (2) recommending feasible fabrication recipes for suitable strain sensors. In a nutshell, the group has designed a machine intelligence that accelerates the design of soft machines.
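To make the two-way idea concrete, here is a toy Python sketch. It is not the team's framework: the recipe variables (`filler_fraction`, `layer_count`), the data points, and the nearest-neighbour averaging used as the prediction model are all invented for illustration. It only shows how a single forward predictor could in principle serve both tasks, by predicting performance from a recipe and by screening candidate recipes against a target.

```python
# Toy illustration only: the recipe variables, data, and model below are
# invented for this sketch and are not taken from the UMD study.
from math import dist

# Hypothetical training data: (filler_fraction, layer_count) -> max strain (%)
TRAINING = [
    ((0.10, 1), 220.0),
    ((0.20, 2), 150.0),
    ((0.30, 3), 80.0),
    ((0.40, 4), 30.0),
]


def predict_max_strain(recipe, k=2):
    """Forward task: average the max strain of the k nearest known recipes."""
    nearest = sorted(TRAINING, key=lambda row: dist(row[0], recipe))[:k]
    return sum(value for _, value in nearest) / k


def recommend_recipes(target, tolerance, candidates):
    """Inverse task: keep candidates whose predicted strain is near the target."""
    return [
        recipe
        for recipe in candidates
        if abs(predict_max_strain(recipe) - target) <= tolerance
    ]


# Hypothetical candidate grid: filler fraction 0.10-0.40, 1-4 layers.
CANDIDATES = [(f / 100, n) for f in range(10, 45, 5) for n in range(1, 5)]

if __name__ == "__main__":
    print(predict_max_strain((0.10, 1)))
    print(recommend_recipes(185.0, 1.0, CANDIDATES))
```

In this sketch the inverse task is simply a filtered search over the forward model. A real system would need a properly validated model and far more data than four hand-written points.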

“What we’ve essentially created is a high-accuracy prediction software—based on a machine learning framework—capable of designing a wide range of strain sensors that can be integrated into diverse soft machines,” said Chen. “To use a food analogy, we gave a list of ingredients to a ‘chef,’ and that chef is able to design the perfect meal based on individual tastes of the customer.”

This technology can be used in the fields of advanced manufacturing, underwater robot design, prosthesis design, and beyond.

This study was published in Nature Machine Intelligence on January 26, 2022. To learn more about Dr. Chen’s work, please visit the group website.


More information: Haitao Yang et al, Automatic strain sensor design via active learning and data augmentation for soft machines, Nature Machine Intelligence (2022). DOI: 10.1038/s42256-021-00434-8

Journal information: Nature Machine Intelligence

Provided by University of Maryland
