Is Artificial Intelligence good?

You’ve read it in the papers. You’ve experienced it in life. Machines are taking over.

And they are doing it fast. Siri turned 9 on October 4 (go ahead, ask her if you don’t believe me). Tesla’s first Autopilot program is also 9. And Alexa is less than 5 years old.

Despite the fact that these AI-powered technologies haven’t graduated past their first decade, they seem to be running our lives. And they are apparently doing it better than we can.

- Just a few weeks ago, research came out showing that artificial intelligence is now on par with human experts at making medical diagnoses.

- In a book published last month, Malcolm Gladwell quotes a Harvard study that saw an artificial intelligence program detect criminals more effectively than a panel of judges.

- And, according to Gartner and Intel, autonomous vehicles will save more than 580,000 lives between 2035 and 2045.

I wrote about the future of driving 4 years ago. I firmly believe that autonomous cars will take over, and for the better. However, the spectacular adoption of AI technologies in the last decade has many worried. Is this going too fast? Should humans slow down this growth? Should you be worried about your job? Or should you be excited about the prospects of productivity and convenience?

How should we all be approaching the ubiquitous occurrence of Artificial Intelligence?

In this post, I’ll attempt to provide a few tools to help you approach what my friend Amr Awadallah, co-founder of Cloudera, has labelled the “Sixth Wave: Automation of Decisions”.

Will Human Jobs Go Away?

You will undoubtedly find a vast body of literature that dissects the potentially harmful consequences of Artificial Intelligence investments. Just a week ago, AI and analytics futurist Bernard Marr warned us about “The 7 Most Dangerous Technology Trends In 2020 Everyone Should Know About”. AI Cloning and Facial Recognition made the list.

Just this past Friday, the McKinsey Global Institute published a research piece showing that the $20B+ invested in artificial intelligence technologies is bound to impact African Americans the most…

If you’re starting to panic, take a breath and pick up a copy of Andrew McAfee and Erik Brynjolfsson’s book: “The Second Machine Age”.

And if you don’t have time, watch this 14-minute TED Talk. In it, Andrew lays out his thesis on the impact and adoption of Artificial Intelligence. His points will help you rationalize this trend and will likely temper the creative headlines that predict the end of humanity as we know it.

Here are a couple of essential takeaways:

- Automation has occurred. Don’t deny it.

- For about 200 years, people have been saying that more technology drives fewer jobs. Data proves this wrong.

“Economies in the developed world have coasted along on something pretty close to full employment,” he says, but he continues: “this time it’s different” because “our machines have started demonstrating skills they have never, ever had before: understanding, speaking, hearing, seeing, answering, writing, and they’re still acquiring new skills.”

How To Automate Effectively

The short of it is that Artificial Intelligence is good. It can create incredible opportunities, but it requires inspection and calls for a method.

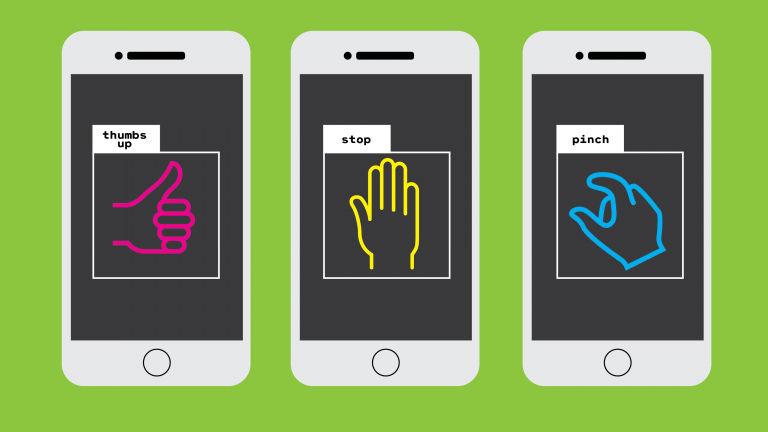

When it comes to “automating tasks” at your work, I suggest that you break them up into 3 categories:

- Automated: these are tasks that are binary, where involving people is counterproductive. Manufacturing lines have been organized around these requirements for decades (look up Robotic Process Automation or RPA).

- Augmented: these are tasks that are non-binary and require humans to check on machines or machines to check on humans. This will most likely cover the majority of your tasks.

- Collaborative: these are tasks that humans are uniquely positioned to initiate, advance and complete.

You might want to come up with your own model. And there again, lots of literature is available to help you. You can refer to my earlier post on the latest books in Artificial Intelligence for some key authors and reference books: I would start with Tom Davenport’s “Only Humans Need Apply”.

One thing is for sure: the race for Artificial Intelligence domination is well under way. Nothing will stop it. Slowing down is not in the cards. Competing is required.

If you live in the United States and are preparing to vote in the next 12 months, this is a topic that will become more relevant as the campaign progresses: DJ Patil (former Data Scientist for President Obama) just released the results of a report on America’s investment in Science and Technology. The United States is at risk of losing its edge. If you don’t have time to read the report, be sure to at least watch this video: this is a topic which deserves attention.
