A new machine can translate brain activity directly into written sentences

Translating up to 50 sentences at once, it’s about as accurate as human transcription

You’ve probably been there: wanting to text someone quickly, but your hands are busy, maybe holding the groceries or cooking.

Siri, Alexa, and other virtual assistants have provided one new layer of interaction between us and our devices, but what if we could move beyond even that? This is the premise of some brain-machine interfaces (BMIs). We have covered these at Massive before, along with some of their potential and limitations.

Using BMIs, people are able to move machines and control virtual avatars without moving a muscle. This is usually done by accessing the region of the brain responsible for a specific movement and then decoding its electrical signal into something a computer can understand. One thing that was still hard to decode, however, was speech itself.

But now, scientists from the University of California in San Francisco have reported a way to translate human brain activity directly into text.

Joseph Makin and their team used recent advances in a type of algorithm that translates text from one language into another (the kind that is the basis for a lot of human language translation software). Building on those improvements in the software, the scientists designed a BMI that is able to translate a full sentence’s worth of brain activity into an actual written sentence.

Four participants, who already had brain implants for treating seizures, trained this computer algorithm by reading sentences out loud for about 30 minutes while the implants recorded their brain activity. The algorithm is built on a type of artificial intelligence that looks at information that needs to be in a specific order to make sense (like speech) and makes predictions about what comes next.

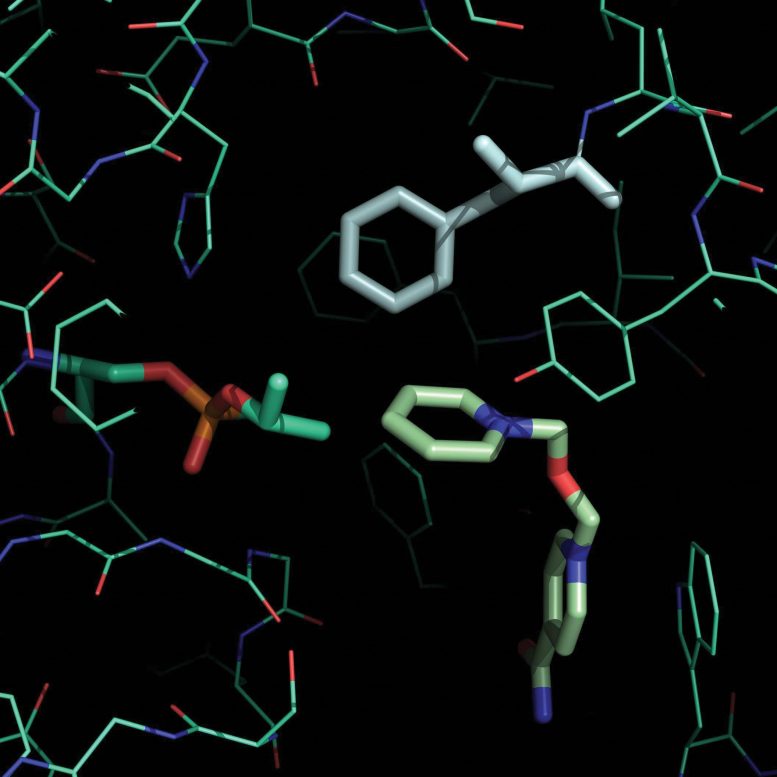

In that sense, this AI is learning sentences, and is then able to create a representation of what regions of the brain are being activated, in what order and with what intensity, to create that sentence. This is the encoder part of the BMI.

The encoder is followed by a different AI that is able to understand that computer-generated representation and translate that into text – the decoder. This encoder-decoder duo is doing for speech what other BMIs do for movement: pairing a specific set of brain signals and transforming that into something computers understand and can act on.
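To make the encoder-decoder idea concrete, here is a minimal toy sketch in Python. It is not the study's model: the weights are random and untrained, the vocabulary, channel count, and every name in it (`encode`, `decode`, `W_IN`, and so on) are made up for illustration, and a real decoder would also take its own previous word into account. It only shows the shape of the pipeline: a sequence of recordings is folded into one fixed-size summary, which is then unrolled into words.

```python
import math
import random

# Hypothetical sizes and vocabulary, chosen only for this illustration.
VOCAB = ["<end>", "hello", "thiago", "the", "groceries"]
N_CHANNELS = 4   # pretend electrode channels
HIDDEN = 6       # size of the summary the encoder produces


def make_matrix(rows, cols, rng):
    """Random, UNTRAINED weights; a real system would learn these."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def encode(signal, w_in, w_rec):
    """Fold a time-ordered list of recordings into one fixed-size state."""
    state = [0.0] * len(w_rec)
    for frame in signal:
        drive = matvec(w_in, frame)     # contribution of the current frame
        memory = matvec(w_rec, state)   # contribution of everything seen so far
        state = [math.tanh(d + m) for d, m in zip(drive, memory)]
    return state


def decode(state, w_out, w_rec, max_words=5):
    """Unroll the encoder's state into words until <end> or max_words."""
    words = []
    for _ in range(max_words):
        scores = matvec(w_out, state)
        best = max(range(len(VOCAB)), key=scores.__getitem__)
        if VOCAB[best] == "<end>":
            break
        words.append(VOCAB[best])
        state = [math.tanh(m) for m in matvec(w_rec, state)]
    return words


rng = random.Random(0)
W_IN = make_matrix(HIDDEN, N_CHANNELS, rng)
W_ENC = make_matrix(HIDDEN, HIDDEN, rng)
W_DEC = make_matrix(HIDDEN, HIDDEN, rng)
W_OUT = make_matrix(len(VOCAB), HIDDEN, rng)

# Ten fake "frames" of brain activity, one value per channel.
fake_signal = [[rng.random() for _ in range(N_CHANNELS)] for _ in range(10)]
summary = encode(fake_signal, W_IN, W_ENC)
print(decode(summary, W_OUT, W_DEC))
```

Because nothing here has been trained, the printed words are arbitrary. Training is what would tune the weights so that a particular pattern of activity reliably comes out as the sentence the person actually said.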

This interface was able to translate 30 to 50 sentences at a time, with an error rate similar to that of professional-level speech transcription. The team also ran another test in which they trained the BMI on the speech from one person before training on another participant. This increased the accuracy of the overall translation, showing that the whole algorithm could be used and improved by multiple people. Lastly, based on the information gathered by the brain implants, the study was also able to expand our knowledge of how very specific areas of the brain are activated when we speak.
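For readers curious about how an error rate like this is typically scored, the sketch below assumes the standard word error rate used for speech transcription: the fewest word substitutions, insertions, and deletions needed to turn the decoded sentence into the intended one, divided by the length of the intended sentence. The function name and the example sentences are made up for illustration and are not taken from the study.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference sentence must contain at least one word")

    # prev[j] = edits needed to turn the first j hypothesis words
    # into the reference words processed so far.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in output
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Made-up example: one wrong word out of six.
intended = "the cat sat on the mat"
decoded = "the cat sat on a mat"
print(round(word_error_rate(intended, decoded), 3))  # 0.167
```

A score of 0 means every word matched. The score can exceed 1 if the decoded sentence contains many extra words.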

In the realm of BMIs, the ideal is always to be able to take a single brain signal and translate it directly into computer code, reducing any intermediary steps. However, for most BMIs, including those for speech, that is a huge challenge. The speech BMIs available before this study were only able to distinguish small chunks of speech, like individual vowels and consonants, and even then with an accuracy of only about 40%.

One of the reasons why this new BMI is more efficient than past attempts is a shift of focus. Instead of small chunks of speech, the team focused on entire words. So instead of having to distinguish between specific sounds, like “Hell,” “o,” “Th,” “i,” “a,” and “go,” the machine can use full words, “Hello” and “Thiago,” to tell them apart.

Although the best-case scenario would be to train the algorithm with the full scope of the English language, for this study the authors constrained the available vocabulary to 250 different words. Maybe not enough to cover the complete works of Shakespeare, but definitely an improvement over most BMIs. Most BMIs currently use some form of virtual keyboard, with the person moving a virtual cursor with their mind and “typing” on this keyboard, one character at a time.


There is a pretty glaring difference between reading brain activity from a brain implant and anything we could do on a larger scale. However, this study opens up fascinating new directions. The algorithm was trained on only about 30 minutes of speech, but the implants will still be there. By continuously examining data, scientists might be able to create a library’s worth of training sets for BMIs, which, as shown, could then be transferred to someone else. There is also the possibility of expanding this study to different languages, which would teach us more about how speech and its representations in the brain might vary across languages.

Much more research will be needed to transform this technology into something that we could all use. It’s likely that this technology will first be used to improve the lives of paralyzed patients, and for other clinical applications. There, the benefits are immense, enabling communication with a speed and accuracy we have not yet been able to achieve.

Whether this becomes another function of Siri or Alexa will depend mostly on what advances we see in capturing brain activity, especially if we’re able to do so without brain implants.