If you’re spending a lot more time indoors lately using fitness apps to maintain your sanity, you might not realize that you can broadcast your heart rate from your Garmin watch straight to your favorite app, skipping the need for a separate heart rate strap/sensor. Garmin has long been one of the few device makers to actually allow this, but if you’ve got a COROS watch or the new Timex R300 you can do the same. More on those details at the end of the post.

Garmin offers two modes for broadcasting heart rate:

1) Over ANT+ (for virtually every wearable ever from them)

2) Over Bluetooth Smart (for most newer 2019/2020 wearables)

The modes vary a bit, so I’ll quickly run through how to do it in both. In general, if you’re using a phone/tablet/Apple TV/Mac, it’s going to be easier to do via Bluetooth Smart, whereas if you’re using a PC it’s going to be easier to do via ANT+ (again, generally). This also works on most exercise gear, even a Peloton bike (which accepts both ANT+ & Bluetooth Smart HR connections).

Now, the point of this series is quick tips – not DCR-length crazy tips. So, here’s a video I put together that shows the tip in 5 minutes:

Or, you can simply scroll on down below for the written details for each watch/broadcasting type.

BLUETOOTH BROADCASTING:

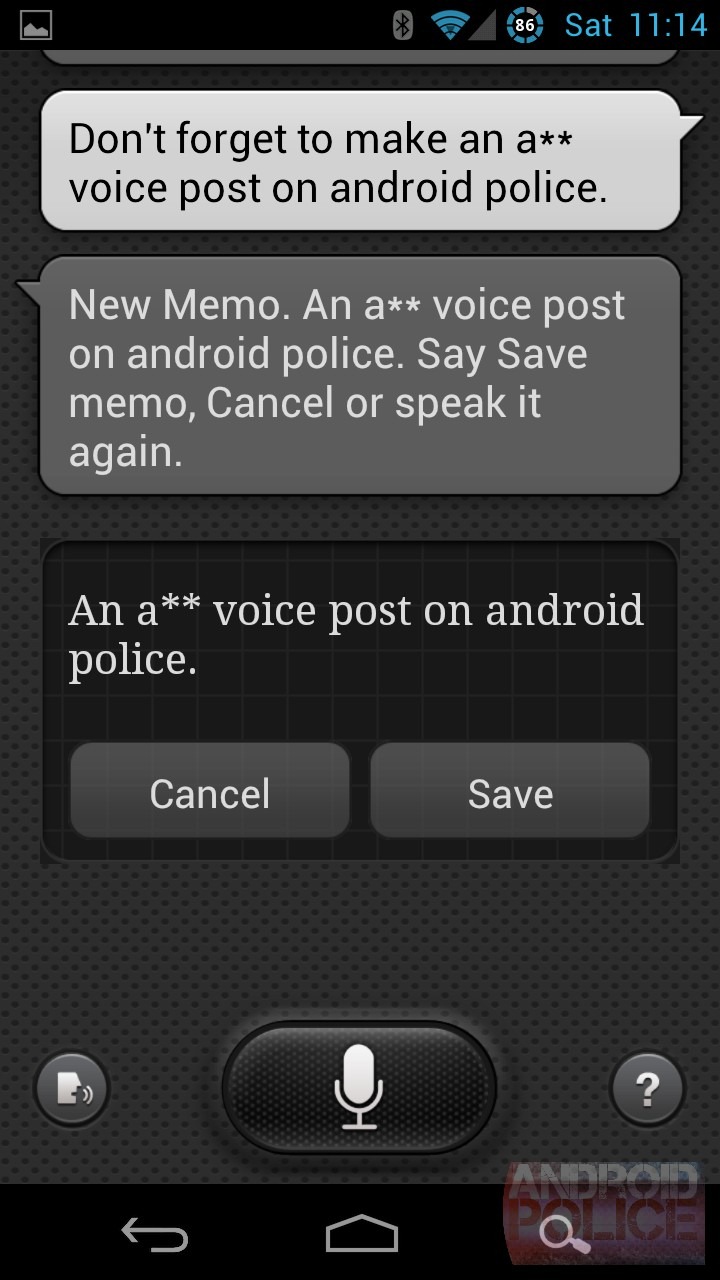

If you’ve got a newer Garmin watch, you’re in luck. Those support Bluetooth Smart transmission using a new ‘Virtual Run’ profile. While designed for running on a treadmill (as it also includes running pace and cadence), it actually works just fine for any activity you want – including cycling on Zwift. I detailed the whole feature here in a post a few months ago.

This function is *only* available on newer Garmin wearables, likely due to hardware architecture limitations on older chipsets. Here’s what’s supported:

Supported watches: Garmin Forerunner 245, Forerunner 945, Fenix 6 Series (it might also work with the Tactix Charlie and Quatix 6 Series, but I don’t have either)

If you don’t have one of the above watches, you’ll need to use the ANT+ broadcasting method down below. Or, if you found this post a year or so in the future and have some mysterious new watch not listed above, it probably supports this method.

To enable it, on your watch go to start a new sport, so press the upper right button once, then scroll all the way down until you find ‘Virtual Run’ (you might have to add it by first pressing the “+” option at the bottom of the list):

Once you’ve got Virtual Run selected, press the down arrow past the informational message to select OK. At this point you’ll be at a screen like this:

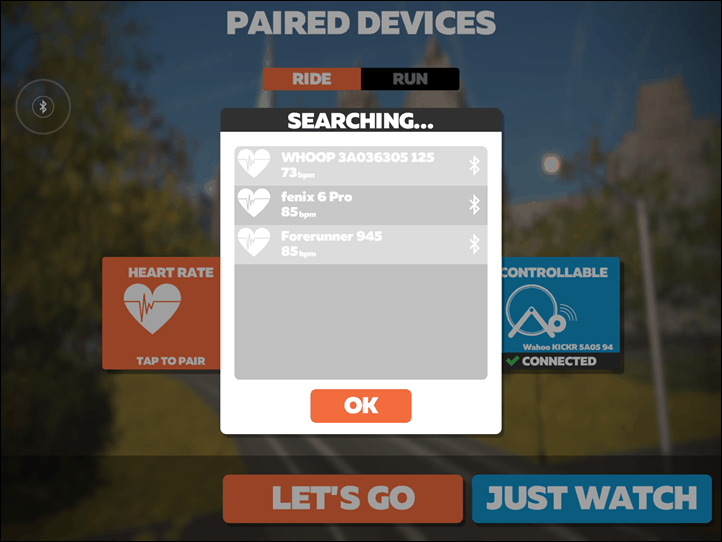

Now, simply open up your app of choice, and you should see the Garmin watch listed, by the name of the watch itself:

Just select that as usual, and you’re good to go!
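For the curious, here’s roughly what an app does with the data once it’s paired. This is a minimal sketch that assumes the watch exposes the standard Bluetooth Heart Rate Measurement characteristic (UUID 0x2A37), which is what most HR straps use; that’s an assumption on my part rather than something Garmin documents for Virtual Run. The function and type names are made up for illustration.

```python
import struct
from typing import List, NamedTuple, Optional


class HeartRateReading(NamedTuple):
    bpm: int
    sensor_contact: Optional[bool]  # None when the sensor doesn't report contact
    rr_intervals_s: List[float]     # beat-to-beat intervals in seconds, if present


def parse_heart_rate_measurement(payload: bytes) -> HeartRateReading:
    """Decode one Heart Rate Measurement notification (hypothetical helper)."""
    if len(payload) < 2:
        raise ValueError("payload too short for a heart rate measurement")

    flags = payload[0]
    offset = 1

    # Bit 0: heart rate is a uint16 when set, a uint8 otherwise.
    if flags & 0x01:
        if len(payload) < 3:
            raise ValueError("payload too short for a 16-bit heart rate")
        (bpm,) = struct.unpack_from("<H", payload, offset)
        offset += 2
    else:
        bpm = payload[offset]
        offset += 1

    # Bit 2: contact detection supported. Bit 1: contact currently detected.
    sensor_contact = bool(flags & 0x02) if flags & 0x04 else None

    # Bit 3: a uint16 energy-expended field follows; skipped in this sketch.
    if flags & 0x08:
        offset += 2

    # Bit 4: one or more uint16 RR intervals follow, in units of 1/1024 s.
    rr_intervals_s: List[float] = []
    if flags & 0x10:
        while offset + 2 <= len(payload):
            (raw,) = struct.unpack_from("<H", payload, offset)
            rr_intervals_s.append(raw / 1024)
            offset += 2

    return HeartRateReading(bpm, sensor_contact, rr_intervals_s)
```

In a real app this would sit in the notification callback of whatever Bluetooth library you’re using; the parsing itself doesn’t depend on the library.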

Meanwhile, on the Garmin watch, you’ll likely want to start the activity. Otherwise, that pairing window times out after about 30-40 minutes, whereas if you simply start the activity it’ll keep broadcasting in the background. Afterwards, just stop and discard it.

Now the only downside to this is that if you wanted to record a legit cycling activity (including connecting to a power meter or cycling sensors), you can’t do that in the Virtual Run mode. In turn, that means the ride won’t show up as a proper workout in your training log, and your physiological training load metrics won’t get updated.

For that, you should use the ANT+ broadcasting method. However, I did ask Garmin whether they’ll simply enable Bluetooth Smart broadcasting in a similar manner to the existing ANT+ method (or implement a virtual cycling option), and they said it’s already in the cards. But they don’t have an exact timeframe for when such a software update might happen. So until then, we’ll have to make do with the above method.

And indeed, I’ve used it a number of times in a pinch. Obviously there can be downsides to optical HR sensors, but I find that accuracy on an indoor cycling trainer tends to be very good (primarily because there’s no road vibration, or running cadence to deal with). Again, it’s an option.

ANT+ BROADCASTING:

Don’t have the fanciest new Garmin? No worries, you can still do this via ANT+ instead. Virtually every Garmin wearable ever made supports this. The steps might vary slightly, but they’ve been doing this for years upon years, even with the cheapest wearables.

The downside with ANT+ of course is that it won’t work on iOS or Apple TV devices (but will on many Android devices). If you’ve got a PC or Mac, you’ll need an ANT+ USB adapter, which you might already have if you use Zwift. It works natively on Peloton bikes (though not with just the digital app), as they support ANT+ heart rate connections.
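If it helps to see the platform rules in one place, here’s a small sketch that simply encodes the guidance from this post as a lookup. The function name and platform labels are made up for illustration, and the "android" entry glosses over the fact that only many (not all) Android devices handle ANT+.

```python
from typing import Optional

# Platforms this post says can receive ANT+ (PC and Mac need a USB adapter).
ANT_CAPABLE = {"android", "pc", "mac", "peloton bike"}
# Platforms where this post suggests Bluetooth Smart is the easier route.
BLUETOOTH_PREFERRED = {"ios", "apple tv", "android", "mac", "peloton bike"}


def suggest_protocol(platform: str, watch_has_bluetooth_broadcast: bool) -> Optional[str]:
    """Return "bluetooth", "ant+", or None when neither option works."""
    key = platform.strip().lower()
    if key not in ANT_CAPABLE | BLUETOOTH_PREFERRED:
        raise ValueError(f"unknown platform: {platform!r}")
    if watch_has_bluetooth_broadcast and key in BLUETOOTH_PREFERRED:
        return "bluetooth"
    if key in ANT_CAPABLE:
        return "ant+"
    # e.g. an ANT+-only watch with an iPhone or Apple TV
    return None
```

So an older ANT+-only watch paired with an Apple TV comes back as `None`, which matches the limitation described above.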

The basic steps below are essentially the same on any Garmin wearable. Now technically there are two ways to do this with ANT+:

A) Ad-hoc broadcasting only when you want it

B) Turn on broadcasting every time you start a workout

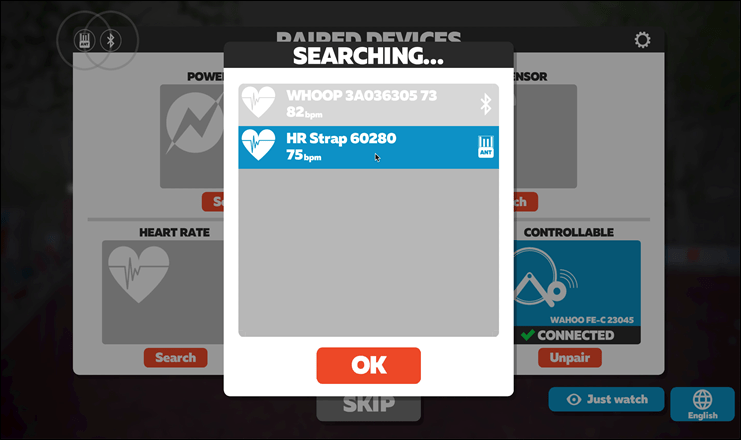

I’ll show you the ad-hoc steps, and then give you the one-line option for turning it on every time you start a sport. Hold down the middle settings button > Sensors & Accessories (in some watches you can skip this menu) > Wrist Heart Rate > Broadcast Heart Rate:

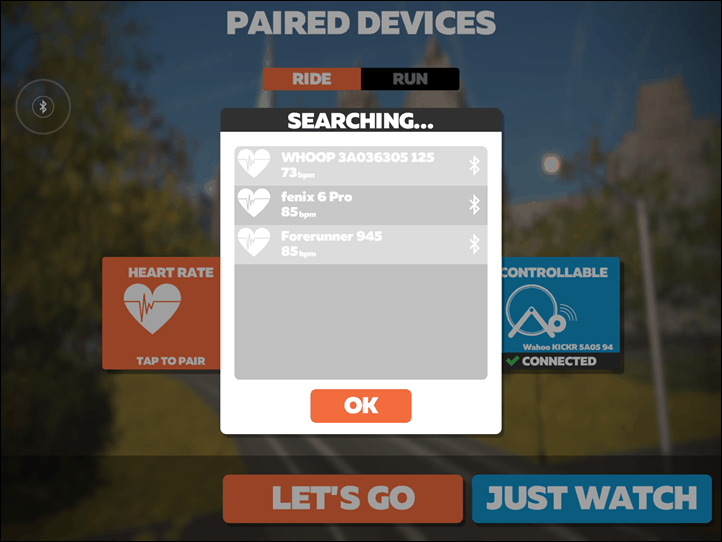

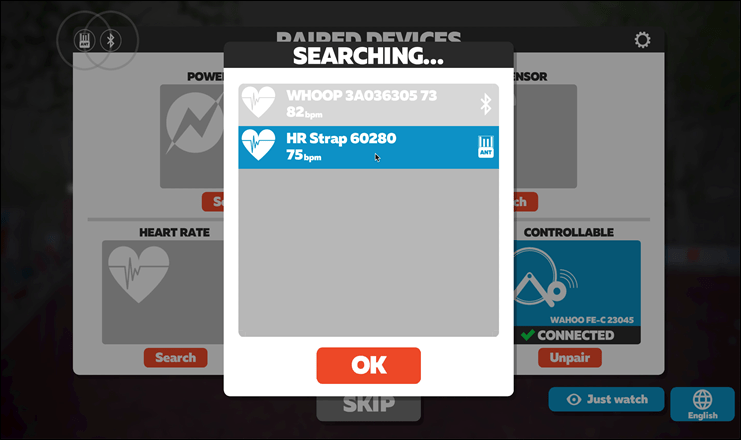

Now, this will be broadcasting your heart rate over ANT+, so you can see it easily from any app compatible with ANT+. For example, here it is on a MacBook with an ANT+ adapter. Note the ANT+ icon, and it even has a unique ANT+ ID that’ll always remain the same (so it’ll automatically connect next time, just like any other HR strap).
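As a rough illustration of what’s going over the air, here’s a sketch of decoding a single 8-byte ANT+ heart rate data page. It follows the layout of the ANT+ heart rate device profile as I understand it (beat event time in bytes 4-5, beat count in byte 6, computed heart rate in byte 7); the names are hypothetical, and receiving the raw bytes from the USB adapter is left out entirely.

```python
from typing import NamedTuple


class AntHeartRatePage(NamedTuple):
    page_number: int
    beat_time_s: float  # time of the last beat event; rolls over every 64 s
    beat_count: int     # rolls over at 256
    bpm: int


def decode_ant_hr_page(payload: bytes) -> AntHeartRatePage:
    """Decode one ANT+ heart rate broadcast payload (hypothetical helper)."""
    if len(payload) != 8:
        raise ValueError("ANT+ data pages are exactly 8 bytes")

    # Byte 0: low 7 bits are the page number, the top bit is a toggle bit.
    page_number = payload[0] & 0x7F
    # Bytes 4-5: little-endian beat event time in units of 1/1024 s.
    beat_time_s = int.from_bytes(payload[4:6], "little") / 1024
    # Byte 6: running heart beat count. Byte 7: computed heart rate in bpm.
    return AntHeartRatePage(page_number, beat_time_s, payload[6], payload[7])
```

The device ID mentioned above isn’t part of this payload; it comes from the ANT channel itself, which is why an app can remember the watch and reconnect to it next time.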

When you’re done, you’ll simply hit the escape button to end the broadcast.

But my favorite option is to simply enable it anytime I start a workout (no matter the type). The battery impact here is negligible. Again, it’s under Settings > Sensors & Accessories (or skipped again on some watches) > Wrist Heart Rate > Broadcast During Activity set to ‘On’:

And now if you go to start a workout you’ll notice the HR icon has a little transmission signal coming off of it:

That’s it – the result is the same and it’ll be seen in any apps/devices that support ANT+. This can also be used to pair to bike computers like a Garmin Edge, Wahoo ELEMNT series, or basically anything. Super practical if you forgot your HR strap or such.

WRAP-UP:

Finally, as I mentioned earlier, there are some other watches that can broadcast your optical HR out as well. They are as follows:

Timex Ironman R300 GPS Watch: To enable this, press the middle button > Settings > Workout > Broadcast HR > Set to ‘ON’ (though in practice, I’m struggling a bit to make this work, so I’ve got some more research to do there).

Whoop 3.0: While not a watch per se, this wearable will broadcast your HR over Bluetooth Smart once enabled from the app. It’ll then remain on at all times for when an app wants to connect to it. It doesn’t materially impact battery life since it only transmits it once required by the app.

By contrast, devices from Apple, Fitbit, Suunto, Samsung, and countless others don’t broadcast your HR. Notably, while some older Polar watches do broadcast your HR, it really only works reliably with other Polar gear. I wouldn’t recommend using it for non-Polar connectivity.

With that – hopefully you found this post useful, and the shorter format helpful as well for these sorts of brief how-to type items.